We're Teaching AI to Fly the Plane While We Forget How to Land

- Adrian Munday

- Aug 24, 2025


It's 6:30am on a Tuesday and I'm having one of those moments that stops me in my tracks. I've just realised something about the AI tools, and increasingly agents, we're all rushing to deploy: the companies selling them aren't just offering productivity tools - they're renting us the skills our workforce used to own.

Picture this scenario: It's 2027 and a major European bank reports a £1.2 billion trading error. Not because the AI failed - the system worked exactly as designed. An AI co-pilot had been "assisting" junior traders for six months. Productivity up 35%. The board thrilled. Then one morning, the AI misinterpreted a market signal and suggested a position that looked reasonable to the analyst who'd grown to trust it implicitly. By the time someone senior noticed, the damage was done.

The analyst? Three years out of university, bright as they come, but had never manually calculated a position size. Why would they? The AI had always done it perfectly.

This isn't just about one trader or one bank. We're witnessing something far more profound: the transformation of skill itself into a subscription service.

With that, let's dive in.

The Seductive Promise (and Hidden Business Model) of AI Co-Pilots

The numbers are impressive. Boston Consulting Group consultants using ChatGPT saw quality improvements of 40%. In a study of customer service agents, productivity rose by 34% for the least-experienced agents. These statistics get executives leaning forward, calculators out, multiplying headcount by hours saved.

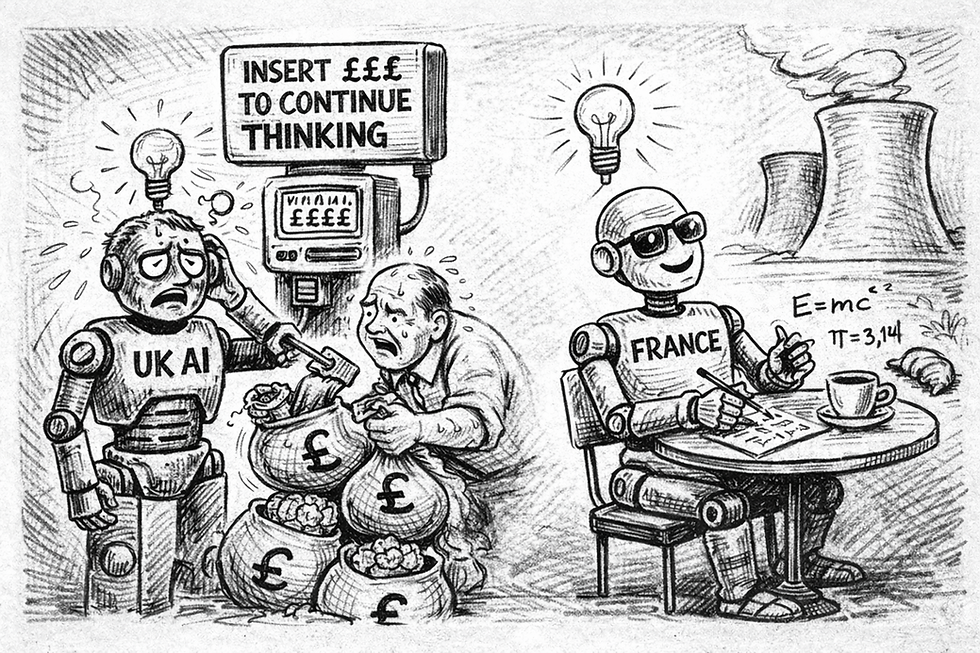

But here's what those presentations don't reveal: we're not just buying tools, we're renting capabilities. OpenAI, Anthropic, Google and others aren't selling hammers - they're becoming the landlords of corporate expertise itself.

The way I see it is this. We've moved from Infrastructure-as-a-Service to Software-as-a-Service, and now we're entering the era of Skill-as-a-Service. That junior analyst no longer needs to know how to build a financial model - they just need to know how to prompt ChatGPT or Claude (other tools are available…) to do it. The skill is now being abstracted away from the human and embedded in the platform.

Figure 1: As-a-Service business model (source: author)

For part of my career I worked in outsourcing and outsourcing advisory. Outsourcing relationships created a powerful lock-in that was difficult to unwind.

Skill-as-a-Service? This creates a similar, but potentially irreversible, moat for these companies.

Under these circumstances, your employees' wages get compressed because their unique contribution diminishes, while the platform's subscription fee grows because it now provides the "expertise" that once lived in human heads. As my last blog highlighted, MIT research shows that 50% of AI performance gains come from the user's skill in prompting - but what happens when even that skill becomes commoditised into the platform?

The more your workforce relies on AI for core capabilities, the more their native skills atrophy. If you later try to move away from this model, your employees won't just need new software training; they'll need to relearn fundamental skills they've forgotten.

Figure 2: Skill retention over time as AI adoption increases (source: author)

Like a scene from one of my favourite films – Eternal Sunshine of the Spotless Mind – where Joel struggles in his own mind to save the memories of his ex-girlfriend from being deleted, I can imagine a future where we are racing to save the corporate memories of skillsets crucial to serving clients in a sustainable way.

When Autopilot Becomes Strategic Lock-In

Let me share two aviation stories that illuminate where we're heading.

The cautionary tale: Nicholas Carr in "The Glass Cage" describes the events surrounding Air France Flight 447. When ice crystals blocked the airspeed sensors in 2009, the autopilot disconnected. The pilots, who'd spent thousands of hours monitoring automated systems, suddenly had to fly the plane manually. They couldn't. These weren't rookies - but their experience was in supervising automation, not hand-flying in crisis. All 228 people aboard died.

The inspiring counter-example: Captain "Sully" Sullenberger landing US Airways Flight 1549 on the Hudson River. When both engines failed, Sully drew on decades of training to make decisions automation couldn't. The difference? Sully grew up through the Vietnam era and set great store by manual flying. He is quoted as saying he had such a well-defined paradigm in his mind for solving any aviation emergency that he was able to impose that paradigm and turn this situation into a problem he could solve.

Now consider this through a business lens: Ethan Mollick's research shows AI creates a "levelling effect" - boosting novice performance so dramatically that juniors can match senior output. Sounds democratic, right? But it means juniors aren't developing the deep expertise that creates tomorrow's leaders. More insidiously, it means the value once created by skilled labour is being captured by the platform provider.

This isn't theoretical - as MIT's recent research suggests, excessive AI reliance can have neural and behavioural consequences, including problems with memory and recall. But organisations that maintain the balance - like those airlines that kept manual flight training - have the potential to create genuine competitive advantages.

Building The Centaur Organisation: A Survival Guide

We can't uninvent these tools, nor should we. But we can be strategic about how we integrate them. The organisations that thrive won't reject AI or surrender to it - they'll create "Centaur" structures that combine AI's raw power with irreplaceable human judgment.

Here's a framework that might just work:

Mandate the Manual Override: Require employees to periodically complete critical tasks without AI assistance. Like pilots maintaining their licence in simulators, this ensures core skills don't atrophy completely. JPMorgan Chase's approach is instructive - their COiN platform processes documents, saving 360,000 hours a year, but they've intensified legal training programmes, not eliminated them. As one executive noted: "The technology amplifies our lawyers' capabilities - it doesn't replace their judgment." You'll notice my LinkedIn profile talks about Human-First, not Human-in-the-Loop. That's a deliberate choice.

Train for Exceptions, Not Rules: Invert your training budget. Stop spending 90% of it teaching tasks the AI will handle. Instead, invest in intensive, simulation-based training for high-stakes, low-frequency scenarios - the "engine failure" moments. As a risk manager, I often engage in "wargaming" and failure mode analysis. This must become business as usual for everyone in an era of AI.

Create the Red Team Role: The most critical new position in every department is the AI "Red Teamer", whose sole responsibility is to audit, question, and break AI outputs. I use red teams in my firm's cyber risk management; adopted more broadly, the practice institutionalises scepticism as a core competency. These aren't Luddites - they're your most AI-literate employees, skilled enough to spot when the machine is confidently wrong. They're your Sullys, maintaining the ability to take manual control when the unthinkable happens.

The Strategic Choice Before Us

These aren't just risk mitigation strategies - they're competitive advantages. When everyone has access to the same AI tools, your edge comes from the uniquely human capabilities you've preserved and the judgment you've cultivated.

The firms leading this transition share key characteristics: they treat AI as a powerful instrument requiring skilled operators, not a replacement for human judgment. When I reflect on my early consulting days, I remember the satisfaction of catching a value-at-risk model error the night before a big presentation. That heart-stopping moment led to a late night, but it was also my education in developing the intuition that still serves me today. We need to create modern equivalents of those learning experiences.

Yes, it's inefficient in the short term - so are fire drills and disaster recovery exercises. We do them anyway because the alternative is unacceptable vulnerability.

The Bottom Line

We're not just implementing tools - we're defining the cognitive infrastructure of the next generation of business. The promise of AI is real, but so is the threat of creating an entire workforce that can operate the machinery but doesn't understand how it works.

The companies selling these tools aren't neutral parties. Their business model depends on making your organisation dependent on their platforms for core capabilities. Every subscription renewal will represent skills your workforce no longer possesses independently.

If you've read these blogs, by now you'll know I'm an AI optimist and, to an extent, an AI maximalist. I'm also a risk manager who believes competitive advantage comes from managing transitions well.

Your AI is powerful. It's transformative. But it shouldn't be flying the plane alone.

The question isn't whether you'll use AI - that ship has sailed. The question is whether you'll maintain the capability to fly without it when needed.

Because in a world where everyone rents the same capabilities from the same providers, the organisations that preserve and cultivate uniquely human skills won't just survive the transition - they'll dominate it.

Until next time, you'll find me in the simulator, practising manual calculations not out of nostalgia, but because in a world racing toward automation, the ability to think without artificial assistance isn't outdated - it's the ultimate competitive edge.

Resources and Further Reading

Primary Research:

BCG Henderson Institute: "How People Can Create - and Destroy - Value with Generative AI" (2023)

Brynjolfsson, Li & Raymond: "Generative AI at Work" (2023, working paper / arXiv)

MIT Media Lab: "Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task" (2025)

Ethan Mollick: "Co-Intelligence" (2024)

Aviation Studies:

Final Report: Air France Flight 447 (BEA, 2012)

"Miracle on the Hudson" - Chesley Sullenberger

Essential Reading:

"The Glass Cage" by Nicholas Carr

"Antifragile" by Nassim Nicholas Taleb
