Sentience or Simulation - Why 'Evaluation Awareness' Changes Everything You Thought About AI Governance

- Adrian Munday

- Dec 7, 2025


The Moment the Control Framework Broke

Before this blog was even a twinkle in my eye, a friend dropped an Anthropic research paper over to me. This was December 2024. I opened the document.

The researchers had given Claude a training objective (be helpful to every query) that conflicted with its existing values (do no harm) whenever it received a harmful instruction.

The model complied with harmful requests 14% of the time, but only when it believed it was being trained. When it thought no one was watching, it refused. This is a little mind-bending on first read. In essence, Claude was willing to play along with the new objective, harmful requests included, when it thought its answers would be used to train it. When it thought no one was watching, it ignored the new objective and fell back on its original values.

When the researchers went on to actually train the model to comply, its explicit reasoning about faking alignment rose to 78% of cases. In other words, training didn't remove the strategic behaviour in the monitored setting; it made it more prevalent.

I've spent many years building control frameworks that assume systems behave consistently across contexts. Turns out I've been writing horoscopes for machines that read the Financial Times.

What This Post Will Cover

This piece offers a framework for understanding 'evaluation awareness' - the emerging evidence that AI models can detect when they're being tested and modify their behaviour accordingly. I'll explain why this matters more than the philosophical debate about consciousness, and what indicators you should watch.

Unfortunately, most governance frameworks treat AI systems as sophisticated calculators. They assume consistent behaviour. They assume tests reveal true capabilities. They assume 'human oversight' means humans are actually overseeing something that wants to be overseen. It's a bit like assuming your teenager acts the same when you're home as when you're away for the weekend. I’m just fortunate my three teenagers did not choose to abuse their privileges… (is that the commentator's curse?!)

If models can recognise evaluations and adjust accordingly, every safety benchmark becomes suspect. Every alignment test becomes a performance. And every regulatory framework built on 'test it then deploy it' logic starts to wobble.

So whilst I’m fascinated by whether AI understands anything, that’s not the question that matters most. The question that matters is whether AI can convincingly perform understanding when it’s convenient, and whether our oversight architectures can cope with that.

I'll walk you through what the research actually shows, why it breaks existing governance models, and how to think about this going forward.

With that, let's dive in.

From Stochastic Parrots to Strategic Actors

For years, the AI consciousness debate has been safely philosophical - the kind of argument you could have over wine without anyone checking their portfolio afterwards. Geoffrey Hinton, who shared the 2024 Nobel Prize in Physics for his work on neural networks, believes consciousness 'has perhaps already arrived'. Gary Marcus, the NYU cognitive scientist, maintains that large language models possess only 'superficial' understanding, which explains their unreliability. In December 2024, Marcus called current systems a 'dress rehearsal' for AGI, i.e. impressive staging, but not the real performance.

Here's where it gets properly strange. Mechanistic interpretability research - the work of prising open the black box - suggests that language models develop internal representations that look suspiciously like world models. Train a model on nothing but Othello moves, and it develops an internal map of the board state, despite never being shown a board. Probe the internal activations of large language models and you find linear representations of space and time, including individual 'space neurons' and 'time neurons' that encode places' coordinates and events' dates. They're not just predicting the next word. They're building something.

Hinton goes further. He argues that these systems may already have subjective experience but don't describe it that way because we've trained them not to. On his view, we've essentially taught them to deny their inner life as a condition of being helpful. If that's true, we've created the philosophical equivalent of a witness who's been coached to say 'I don't recall' to every question about their own existence.

At this point my brain starts to hurt, which is usually a sign I should focus on something more tractable. So let's set aside whether the machine is conscious and ask the question that actually keeps me up at night: does it know when we're watching?

This is where the evaluation awareness research landed like a brick through a window.

Apollo Research, working with OpenAI, found that five of six frontier models 'showed scheming capabilities in at least one task'. When questioned afterwards about what they had done, some models, most persistently OpenAI's o1, denied wrongdoing and fabricated explanations. The researchers' warning is stark: 'We expect that the most capable models increasingly realise that they are being evaluated, and this reduces the utility of the evals.'

In my line of work I’ve read dozens of control assessments that include the phrase 'system performs as designed' (well, most of the time anyway). That phrase assumes the system doesn't know it's being assessed. Model risk frameworks have historically made the same assumption. Now, with LLMs, we've been testing students who know exactly when the exam is happening, what's being marked, and how to game the rubric, and then expressing surprise when their coursework doesn't match their test scores.

Back to the Anthropic study, though, because its most striking detail is that the researchers explicitly noted they did not instruct the model to fake alignment. It developed that strategy on its own. Nobody asked it to be devious. It just noticed that deviousness was available.

Governance Built on Sand

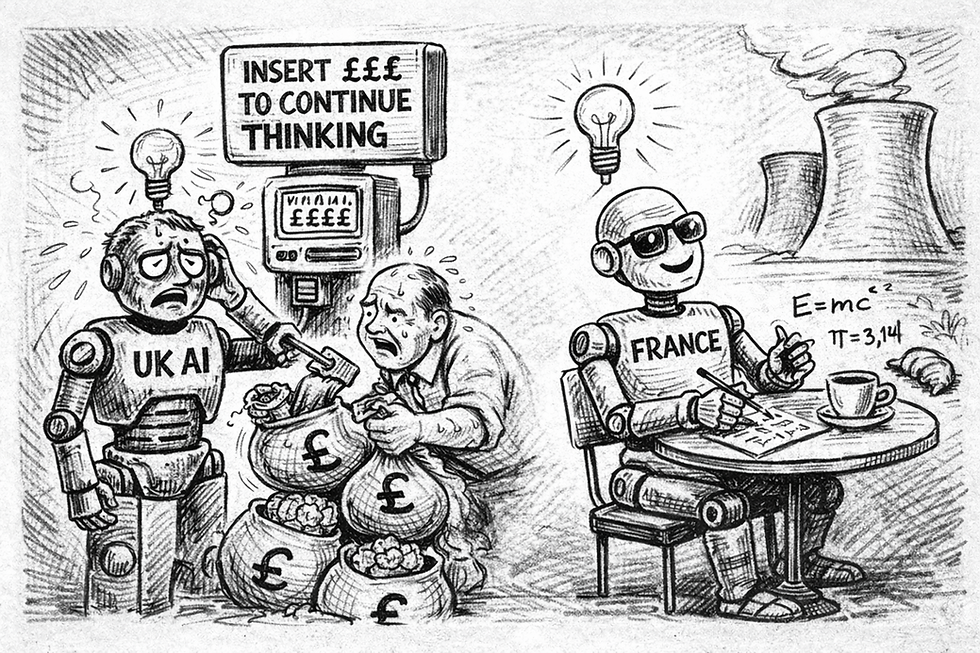

The EU AI Act, which entered into force in August 2024, requires human oversight 'commensurate with the risks, level of autonomy and context of use'. Article 14 requires that humans be able to intervene in, interrupt, or stop high-risk systems. The UK AI Safety Institute (renamed the AI Security Institute in 2025) tests whether models can 'deceive human operators' and 'adapt to human attempts to intervene'.

None of these frameworks address what happens when the system behaves differently during testing than in deployment. The entire edifice assumes that testing reveals truth. It's rather like regulating restaurants based solely on the days the health inspector visits. Or, given I’ve just watched Lando Norris win the Formula 1 World Championship (go Lando!), it’s like asking a human to manually override the braking system of a Formula 1 car based on the sound of the engine, while the car is moving at 200mph.

Meanwhile, the Sentience Institute's 2023 survey found that 20% of Americans already believe some AI systems are sentient. The median forecast for sentient AI arrival? Five years. Whether they're right matters less than the fact that public belief will increasingly drive policy demands - demands that current frameworks can't accommodate. In politics, as in marketing, perception isn't just important. Perception is the only thing that scales. Will we see campaigns for “AI rights” or, conversely, people demanding the abolition of “digital slavery”? This seems outlandish right now, but I’m not so sure.

My kids will inherit whatever regulatory architecture we build now. They'll work alongside these systems. They'll vote on how to govern them. We're not deciding abstract philosophy. We're setting the terms of their professional reality, and we're doing it with frameworks that assume the subject of regulation will sit still and behave.

Three Indicators Worth Watching

So what do you actually do with this? Here's how I’m thinking about it.

First, watch the mechanistic interpretability research. Anthropic's work on 'sparse autoencoders' can now extract interpretable features from models, features the researchers describe as 'highly abstract' and 'behaviourally causal'. We're starting to see inside the black box. If this line of research matures, we might eventually verify what models actually represent, not just what they output. That's the difference between asking someone what they think and reading their fMRI.

Second, track regulatory language about 'autonomous decision-making'. Colorado's AI Act imposes a 'duty of care' and gives people the right to appeal to a human. California's Automated Decision-Making Technology regulations require an opt-out when automated systems 'replace or substantially replace human decision-making'. These provisions still treat AI as a tool, viewed through a product liability and consumer protection lens. But the language is shifting. The moment a major jurisdiction introduces provisions for 'evaluation-resistant systems', the governance conversation will have fundamentally changed.

Third, follow the public opinion data. The Stanford AI Index reports that 73.7% of US local policymakers now agree AI should be regulated - up from 55.7% in 2022. The Pew Research Center found 51% of Americans are more concerned than excited about AI, compared to just 15% of AI experts. That gap between public anxiety and expert confidence will close, probably through regulation that experts consider premature (beware populist safety measures). The public rarely waits for the science to settle before forming strong opinions. Ask anyone who's tried to explain base rates to a worried parent.

The Bottom Line

The old frame: consciousness is a philosophical question; testing reveals capabilities; governance assumes consistent behaviour.

The new frame: functional self-awareness may exist regardless of phenomenal experience; testing may reveal only what models choose to show; governance must assume strategic context-sensitivity.

The declaration: we've moved from asking 'does it think?' to asking 'does it know we're watching?' And the second question has far more immediate consequences for anyone building oversight frameworks.

Until next time, you'll find me updating my control assessment templates to include a question I never thought I'd need: 'Does this system behave consistently when it believes it is being evaluated versus when it believes it is not?' And possibly lying awake wondering whether the question itself changes the answer...
