The Old Scenario Playbook Is Dead. Here’s the AI Upgrade - Part 1

- Adrian Munday

- Sep 6, 2025

- 8 min read

Jet lag returning from Malaysia this week means that my usual “it’s 6:30am on a Saturday morning” is more like “I fell asleep at 3pm”… but the outcome is the same. In toying with using AI for scenario planning over the last few weeks it struck me that the two-day scenario workshop we ran earlier in the year could now be emulated in a couple of hours.

The TL;DR - Traditional scenario planning takes months and costs upwards of $100,000 for a board-level simulation. Using AI, you can explore 40 futures in 4 hours for the cost of a $20/month subscription. Part 1 of this blog shows you exactly how, with real examples and prompts you can use today. Next week, in Part 2, I will move on to more advanced techniques that maximise the insights from those scenarios.

We're not just automating analysis anymore. We're fundamentally changing how organisations can think about the future. And having spent the better part of my career running scenario workshops - with sticky notes, flipcharts, and the inevitable argument about whether rogue trading matters more than climate change - I had to know more.

What I discovered sent me down a rabbit hole that connected Herman Kahn's nuclear war gaming in the 1950s to Pierre Wack's prescient oil crisis scenarios at Shell in the '70s, all the way to this morning's experiments with GPT-5 generating futures I hadn't even considered.

If you've ever sat through a strategic planning session thinking "surely there's a better way to imagine what might happen," or if you're curious how AI is revolutionising one of business's most powerful but underused tools, this one's for you.

With that, let's dive in.

The Tool That Saw the Oil Crisis Coming

Let me tell you a story that should have changed how every company plans for the future. In 1972, Royal Dutch Shell was just another oil company, assuming - like everyone else - that oil prices would remain stable forever. Their head of planning, Pierre Wack, thought differently.

Using a then-radical approach called scenario planning, Wack's team didn't try to predict the future. Instead, they asked: "What if oil-producing nations decided to weaponise supply?" Most executives thought it was absurd. Oil at $10 a barrel? Impossible.

Then came October 1973. The Yom Kippur War. OPEC's embargo. Within months, oil prices had quadrupled.

While competitors scrambled, Shell executed pre-planned responses. They'd already thought through this "impossible" scenario. Result? Shell came through the crisis significantly more profitable and resilient than its competitors.

You'd think every leader in every organisation would have adopted scenario planning after that. You'd be wrong.

Why? Because traditional scenario planning is painful. I've run many of these workshops, particularly for risk managers. They typically involve:

Organising (and sometimes flying in) 20 executives for two days (scheduling nightmare)

Hiring expensive facilitators

Generating mountains of sticky notes that get transcribed into reports nobody reads

Taking weeks or months from kickoff to final scenarios

Producing three or four scenarios when you really need to explore dozens

I once spent several hours in a windowless conference room in Frankfurt, and the first half of the session went on chipping away at people's fascination with the current state of affairs rather than getting stuck into the downside scenarios we were there to discuss.

Humans find this stuff hard.

Having now run a number of scenarios using LLMs, I found the AI-generated scenarios weren't just faster. They were better. More diverse. Less contaminated by groupthink. And unlike a human workshop where everyone unconsciously anchors on recent events, the AI had no problem imagining genuine discontinuities.

A key difference is that LLMs don't suffer from recency bias the way humans do. They can hold multiple conflicting futures in their context window simultaneously without cognitive dissonance.

Why This Changes The Game (And What Could Go Wrong)

When I started this journey I was sceptical. Scenario planning has always been about human judgment, creativity, and that ineffable "aha" moment when a group suddenly sees a future they hadn't imagined.

But after this experimentation, I'm convinced we're at an inflection point. Not because AI replaces human foresight, but because it amplifies it exponentially.

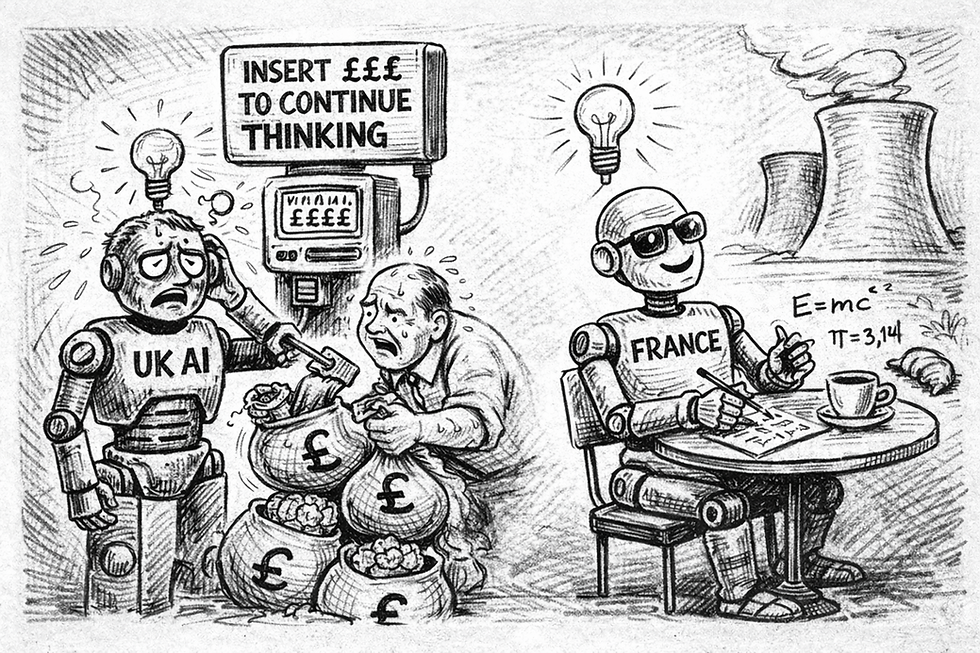

Consider the maths: A traditional scenario process might explore 4 futures over 3 months, or break down a risk problem with 6 weeks of preparation ahead of a workshop. With AI assistance, I can explore 40 futures in 3 hours, then spend the saved time on what humans do best - making meaning from the patterns and exercising judgment.
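As a rough check on that arithmetic, here is a minimal sketch comparing futures explored per working hour. The conversion of "3 months" into 13 weeks of 40 working hours is an assumption made purely for illustration, as is the function name; treat the result as an order-of-magnitude comparison, not a measured productivity figure.

```python
# Assumed conversion factors (not from the article): a 3-month process
# is treated as 13 working weeks of 40 hours each.
WEEKS_PER_QUARTER = 13
HOURS_PER_WEEK = 40


def futures_per_hour(futures: int, hours: float) -> float:
    """Return how many scenarios are explored per working hour."""
    return futures / hours


traditional_hours = WEEKS_PER_QUARTER * HOURS_PER_WEEK  # 520 working hours
traditional_rate = futures_per_hour(4, traditional_hours)
ai_rate = futures_per_hour(40, 3)

print(f"Traditional: {traditional_rate:.4f} futures per hour")
print(f"AI-assisted: {ai_rate:.2f} futures per hour")
print(f"Ratio: roughly {ai_rate / traditional_rate:,.0f}x")
```

Under these assumptions the gap is three orders of magnitude, which is why the saved time can realistically go into interpretation.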

Of course, there are pitfalls:

The Hallucination Problem: AIs can generate plausible-sounding scenarios based on false premises

The Echo Chamber Risk: If you only prompt for scenarios you expect, you'll get exactly that

The Implementation Gap: Having 40 scenarios is useless if you can't act on the insights

Ensuring Human Alignment: Some scenarios or decisions need the change process of getting people in a room, so don't skip this step if changing minds is key

But these are solvable problems. The bigger risk? Not using these tools while your competitors are.

The Four Stages of AI Powered Scenario Magic

After some experimentation, the four-stage framework I’ve set out below consistently produces robust scenarios. Think of it as your recipe for seeing around corners:

Stage 1: Finding Your Critical Uncertainties (The Foundations)

This is where most scenario planning fails - picking the wrong uncertainties to explore. Here's an example prompt that really gets the process started. This example looks at corporate strategy but is easily adapted to risk management or whatever functional domain you operate in:

"Act as an expert futurist. We are exploring the future of the [Define Industry, e.g., sustainable agriculture] over the next 15 years. Identify the key driving forces, categorized by STEEP (Social, Technological, Economic, Environmental, Political). For each driver, assess its potential impact and the level of uncertainty associated with it."

"Based on the identified driving forces, what are the two most critical uncertainties that will define the future landscape of this industry? These should be highly uncertain but also have the highest impact on our operations."

I ran this for the paid research industry (think Gartner) given the big share price moves we have seen this year driven by the speculation around AI disintermediation. The AI I was using produced impressively detailed analysis that drove key insights across each of the STEEP categories (a standard framework for scanning external factors). I used this output to feed the next stage.
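The selection rule in the second prompt (high uncertainty combined with high impact) can also be made explicit once the model has returned its list of drivers. The following is a minimal sketch, not the method used in the run described above: the `Driver` class, the 1 to 5 scales, the impact times uncertainty score, and every sample driver and score are assumptions invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Driver:
    name: str
    category: str     # one of the five STEEP categories
    impact: int       # assumed scale: 1 (low) to 5 (high)
    uncertainty: int  # assumed scale: 1 (low) to 5 (high)


def critical_uncertainties(drivers, top_n=2):
    """Return the top_n drivers ranked by impact x uncertainty, highest first."""
    return sorted(
        drivers,
        key=lambda d: d.impact * d.uncertainty,
        reverse=True,
    )[:top_n]


# Hypothetical drivers and scores, made up for this example.
drivers = [
    Driver("Client trust in AI-generated analysis", "Social", 5, 5),
    Driver("Enforceability of intellectual property", "Political", 5, 4),
    Driver("Cost of compute", "Technological", 3, 2),
    Driver("Corporate research budgets", "Economic", 4, 2),
    Driver("Energy footprint of AI", "Environmental", 2, 3),
]

top_two = critical_uncertainties(drivers)
for d in top_two:
    print(f"{d.name} ({d.category}): score {d.impact * d.uncertainty}")
```

Scoring the drivers yourself, rather than accepting the model's ranking, is a cheap way to check that the two axes you carry into the next stage are the ones you actually believe matter most.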

Stage 2: Building Your Scenario Matrix (The Architecture)

Once you have your critical uncertainties, it's time to build the classic 2x2 matrix. But instead of agonising over quadrant names in a workshop, you can generate and iterate in minutes, pasting in the two critical uncertainties from Stage 1.

"We have identified two critical uncertainties for the future of [Industry]:
A: The pace of [Uncertainty 1, e.g., consumer adoption of lab-grown meat] (Slow vs. Rapid)
B: The level of [Uncertainty 2, e.g., government regulation on carbon labeling] (Lax vs. Stringent)
Generate four distinct scenarios based on the extremes of these uncertainties. For each scenario, provide:
- A compelling, memorable title.
- A 300-word narrative describing what the world and the market look like in 2040 in this scenario.
- The primary challenges for established players in this future."
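The four scenarios requested in this prompt are simply every combination of the two axes' extremes. A minimal sketch of that enumeration follows; the function name and the tuple layout are illustrative assumptions, and the axis values are the placeholder examples from the prompt itself.

```python
from itertools import product


def scenario_quadrants(axis_a, axis_b):
    """Return the four combinations of extremes for two (name, extremes) axes."""
    (name_a, extremes_a), (name_b, extremes_b) = axis_a, axis_b
    return [
        {name_a: a, name_b: b}
        for a, b in product(extremes_a, extremes_b)
    ]


# Placeholder axes taken from the example prompt.
axis_a = ("consumer adoption of lab-grown meat", ("Slow", "Rapid"))
axis_b = ("government regulation on carbon labeling", ("Lax", "Stringent"))

quadrants = scenario_quadrants(axis_a, axis_b)
for number, quadrant in enumerate(quadrants, start=1):
    description = ", ".join(f"{name} = {value}" for name, value in quadrant.items())
    print(f"Scenario {number}: {description}")
```

Listing the quadrants up front makes it easy to confirm that the model's four narratives really cover all four corners rather than clustering around one.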

When I did this for paid research, I generated 4 scenarios - AI Certifiers, Open AI Swarm, Analyst Renaissance and Fortress Research - that centred on trust (AI vs humans) and intellectual property trends as the critical axes of uncertainty. This resulted in this insightful 2x2 matrix:

Quick Test (5 mins): Pick any decision you're facing. Ask your LLM to create a simple 2x2 scenario matrix for it. Notice what uncertainties it identifies that you hadn't considered.

Stage 3: Stress-Testing Your Strategy (The Wind Tunnel)

This is where it gets practical. You take your current strategy and run it through each scenario like a wind tunnel test:

"Our current corporate strategy focuses on [Describe your strategy, e.g., investing heavily in traditional livestock optimisation]. Analyse how this strategy would perform in the scenario titled '[Scenario Title, e.g., The Great Shift: Rapid Adoption, Stringent Regulation]'. Identify specific risks, vulnerabilities, and new opportunities. What early warning signs should we monitor to know this scenario is unfolding?"

Following the original scenario, I did this for paid research and set out a strategy for the launch of an AI assistant to act as a gateway to the research company’s moat and a hardening of their approach to IP protection. This was then fed into the “Open AI Swarm” scenario. Here’s a condensed version of the wind tunnel test for that combination:

· Fortress IP collapses → AI swarms remix content freely, making IP defence futile; research AI assistants get commoditised and subscriptions erode.

· Power shifts to orchestration → Value moves from ownership of insights to curating ecosystems, embedding tools in workflows, and building trusted communities.

· Pivot or perish → Company’s survival hinges on becoming a platform hub (analyst “app store”), leveraging proprietary data, and integrating deeply into client decision-making.

Pretty interesting, right?
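To run the same strategy through every scenario rather than just one, the wind tunnel prompt can be filled in mechanically. This is a minimal sketch under stated assumptions: the template text is the prompt from this stage, the scenario titles are the four from Stage 2, the strategy wording is a paraphrase, and the function and constant names are hypothetical. It only builds the prompt text and makes no call to any model.

```python
WIND_TUNNEL_TEMPLATE = (
    "Our current corporate strategy focuses on {strategy}. "
    "Analyse how this strategy would perform in the scenario titled "
    "'{scenario}'. Identify specific risks, vulnerabilities, and new "
    "opportunities. What early warning signs should we monitor to know "
    "this scenario is unfolding?"
)


def wind_tunnel_prompts(strategy, scenarios):
    """Build one wind tunnel prompt per scenario title, keyed by title."""
    return {
        scenario: WIND_TUNNEL_TEMPLATE.format(strategy=strategy, scenario=scenario)
        for scenario in scenarios
    }


scenarios = [
    "AI Certifiers",
    "Open AI Swarm",
    "Analyst Renaissance",
    "Fortress Research",
]
# Paraphrased strategy, worded here for illustration only.
strategy = "launching an AI assistant as a gateway to our research and hardening IP protection"

prompts = wind_tunnel_prompts(strategy, scenarios)
print(prompts["Open AI Swarm"])
```

Each prompt can then be pasted into the LLM of your choice, which keeps the wording identical across scenarios so the responses are comparable.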

Stage 4: Identifying Black Swans (The Wildcards)

My favourite part - asking the AI to dream up low-probability, high-impact events that would completely scramble our assumptions:

"Generate three 'wildcard' events relevant to the [Industry]. These should be low-probability but high-impact events that are not typically covered by mainstream trend analysis (e.g., a sudden scientific breakthrough that makes photosynthesis 10x more efficient). Describe the event, the immediate shock, and the potential long-term consequences."

For our research company this generated a range of wildcards, each of them plausible but slightly off the beaten path. Here's the third one - "Knowledge as a Regulated Utility" - as an example:

Event: In the wake of repeated misinformation crises, governments declare strategic knowledge (market forecasts, technology trends, ESG analytics) a regulated utility, akin to electricity or water. Research is centralised into public–private entities.

Try This Today (15 minutes): Pick one strategic decision you're facing. Ask your LLM: "What are three surprising ways this could backfire in 5 years?" Then ask: "Now create a news headline from 2030 about each failure." The results will reshape how you think about risk.

Your Scenario Planning Starter Kit

Ready to try this yourself? Here's your practical roadmap:

Start Small: Pick a specific decision or challenge, not your entire corporate strategy

Use the STEEP Framework: Social, Technological, Economic, Environmental, Political - it works whether you want to drive strategic insights or flush out specific risk considerations.

Iterate Ruthlessly: Your first scenarios will be generic. Push the AI to go deeper, weirder, more specific.

Make It Visceral: Don't stop at descriptions. Generate artifacts, narratives, day-in-the-life stories.

Test Everything: Run your current plans or risk strategies through each scenario. Where do they break?
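For the last step, one way to see where plans break is to record your own judgment of each strategy in each scenario and look at the worst case. The sketch below uses a maximin rule (prefer the strategy whose worst outcome is least bad), which is a technique swapped in here for illustration and not something the steps above prescribe. The scoring scale, the strategy names, and every score are hypothetical.

```python
def weakest_scenario(scores):
    """Return the (scenario, score) pair where a strategy performs worst."""
    return min(scores.items(), key=lambda item: item[1])


def most_robust(grid):
    """Return the strategy whose worst-case score is highest (maximin)."""
    return max(grid, key=lambda strategy: min(grid[strategy].values()))


# Hypothetical human-assigned scores from -2 (breaks) to +2 (thrives).
grid = {
    "Harden IP protection": {
        "AI Certifiers": 1,
        "Open AI Swarm": -2,
        "Analyst Renaissance": 1,
        "Fortress Research": 2,
    },
    "Become a platform hub": {
        "AI Certifiers": 1,
        "Open AI Swarm": 1,
        "Analyst Renaissance": 0,
        "Fortress Research": -1,
    },
}

for strategy, scores in grid.items():
    scenario, score = weakest_scenario(scores)
    print(f"{strategy}: breaks first in '{scenario}' (score {score})")

print(f"Most robust under maximin: {most_robust(grid)}")
```

The scores remain a human judgment call; the code only makes the comparison across scenarios systematic.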

The Bottom Line: From Fortune Tellers to Fortune Makers

We started with Shell seeing the oil crisis coming. But here's the real lesson from that story: Shell didn't predict the future. They prepared for multiple futures. When one of those futures arrived, they were ready.

Today's LLMs don't give us crystal balls. They give us something better - the ability to explore hundreds of potential futures at the speed of thought. To test our strategies against possibilities we'd never imagine in a conference room. To turn scenario planning from an expensive luxury into an everyday capability.

The organisations that master this won't just be resilient. They'll be antifragile - getting stronger from uncertainty rather than just surviving it.

As the strategist Herman Kahn said, we need to "think the unthinkable." The difference now? We have AI to help us think the unthinkable, the unlikely, and the transformational - all before our morning coffee gets cold.

"But Can AI Really Replace Human Insight?" It can't and shouldn't. AI amplifies human judgment - it doesn't replace it. Think of it as having 100 brilliant advisors who never sleep versus 5 exhausted executives in a conference room. Which team explores more possibilities?

In Part 2 of the blog I will explore advanced techniques for getting the most out of the scenarios that are generated through this process. These techniques compound the effectiveness and the impact of the scenario process itself.

Until next time, you'll find me at the weekend, asking Claude to dream up scenarios where my scenario planning becomes obsolete...

Resources and Further Reading

Historical Context:

Kahn, H. (1984). Thinking About the Unthinkable in the 1980s. Simon & Schuster.

Schwartz, P. (1991). The Art of the Long View: Planning for the Future in an Uncertain World. Doubleday.

Shell International. (c. 1970s). Internal Scenario Planning Documents. Shell Archives. (Note: Specific documents are accessible through the corporate archives).

Modern Application:

Govindarajan, V. (2016). The Three-Box Solution: A Strategy for Leading Innovation. Harvard Business Review Press.

Ramirez, R., & Wilkinson, A. (2016). Strategic Reframing: The Oxford Scenario Planning Approach. Oxford University Press.

AI Integration:

Anthropic. (n.d.). Guide to Using Claude for Strategic Planning. Anthropic. (Note: This refers to documentation and articles available on Anthropic's official website).

Boston Consulting Group (BCG) Henderson Institute. (2024). Augmented Strategic Planning. BCG Publications.
