From Maker's Identity to Curator's Identity: How Professionals Can Survive The Shift That's About to Hit

- Adrian Munday

- Nov 16, 2025

It's 3:40pm on a Wednesday and I'm reviewing a scenario that I supposedly wrote. The model produced it in seconds – clear structure, covers the key risks, even the right naming conventions. My actual contribution? Removing a risky assumption, tightening an edge case, and rewriting one line.

Functionally, the scenario works. Organisationally, it's a win.

But psychologically, something feels off.

Because the quiet question underneath the cursor is: if I didn't really build this, what exactly did I do?

More people are about to live in that moment, not once, but constantly, as AI starts producing the visible artefacts of knowledge work: the code, the model, the scenario, the analysis. And the impact isn't technical. It's existential.

That’s what I'm going to cover in this post. I believe it is one of the most important topics in this Big Questions series, because it will determine whether we thrive or merely survive in this era of AI (putting humans first is my passion when it comes to AI topics).

Today I'm going to propose a framework that will help professionals survive this shift. The shift isn't job loss - it's identity loss. Friends and former colleagues describe it as a growing sense of dissonance they've felt over the last couple of years but can’t articulate.

I will talk about four competencies that actually become more valuable in an AI-accelerated environment.

Finally, I propose a practical playbook for rebuilding your professional identity so you don’t get left behind psychologically while staying ahead technically.

Because if you work in tech, finance, risk, engineering, analytics, strategy, consulting, or any domain where “outputs” used to be your proof of expertise, you’re about to feel this shift in your bones.

And if you don’t navigate it consciously, this next phase of AI won’t burn out your job - it’ll burn out you.

AI isn’t replacing your work. It’s replacing the parts of your identity you used to mistake for your work.

And that is far more destabilising than automation.

Most people focus on skills, tools, and workflows.

But the failure point isn’t competence.

It's the gap between what your job now requires and the story you still tell yourself about what makes you valuable.

With that, let's dive in.

The Invisible Shift: Your Job Still Exists, But Your Old Self Doesn't

For two decades, the prestige economy of knowledge work was built around construction. You were valued because you made things.

Developers wrote code. Analysts crafted models. Risk teams authored scenarios and insights. Engineers built systems by hand.

These outputs were the receipts of competence. They proved you were good.

Generative AI dissolves that link. You still have outputs, but now the machine produces the part that used to anchor your identity.

It’s a bit like suddenly discovering that your grandmother’s secret recipe comes straight from the back of a packet. The dish still tastes good, you just don’t know who you are anymore while eating it.

Research backs this up. Studies from 2022 through 2025 show that workers experience professional identity threat not when jobs disappear, but when the visible part of their craft becomes automated. Even when people remain essential, they feel less essential. Think I'm exaggerating?

This post from @lucas_montano shows this effect is already here for some. And I didn't have to look far while researching this blog. It was one of many posts I could have included in which people describe this dissonance in one form or another.

In this reality, your job is safe, but you don't feel significant. That's the identity gap opening.

The Status Ladder Collapses

Status in technical fields has always been tied to production. Shipping. Building. Creating.

But when AI handles the first pass at code, analysis, risk assessments, documentation, scenarios and dashboards, your role shifts from maker to curator.

Curation is high-skill work. But culturally, we don't see it that way. We unconsciously believe effort is a proxy for virtue. I have worked with many people over the years who still assume the most exhausted person in the room must be the most valuable.

This is the same tension that editors, code reviewers, senior clinicians, and model validators have lived with for years. Their craft is judgement – choosing what not to ship, what not to trust, what not to escalate.

Yet society glamorises the person on stage, not the person deciding what deserves the stage. In AI-era work, this bias becomes systemic.

Organisations treat curatorial labour as overhead ("middle management" as a derogatory term is a good example). People internalise that story. Identity drags behind reality.

Why Curatorial Identity Is the New Centre of Gravity

But curation is not derivative work.

In complex systems – finance, engineering, healthcare, cybersecurity, aviation – the person who decides which signals matter controls the system.

This is why researchers increasingly focus on delegation of judgement as the core risk in AI-assisted work. Whoever shapes the framing shapes reality. This is why context engineering quickly overtook prompt engineering as the key skill to master.

When machines generate abundant options (or abundant AI slop at its worst), the scarce skill becomes selecting what's trustworthy, spotting subtle contradictions, escalating weak signals, discounting elegant but unsafe outputs, integrating conflicting model narratives, understanding constraints the model cannot see, and deciding when to override with human intuition or experience.

This is the work that makes organisations resilient. But it's historically invisible. And what's invisible feels unimportant, even to the person doing it.

That's the identity trap.

The Four Competencies That Survive Automation

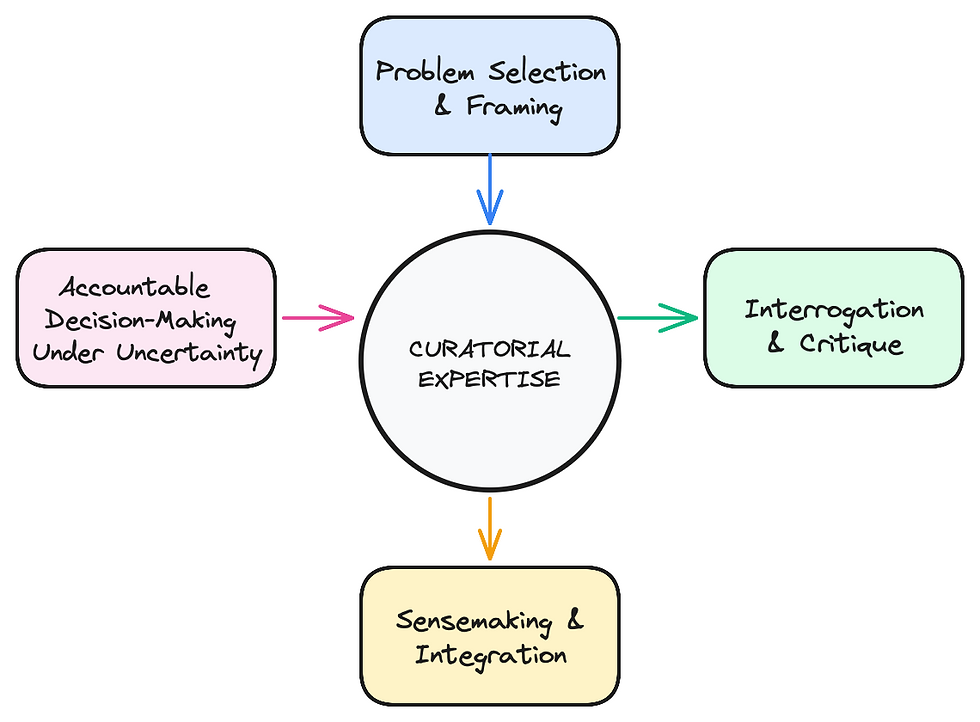

Across research and across industries, the same pattern emerges. When AI takes over production, the remaining high-impact human work clusters around four capabilities:

1. Problem selection and framing - Models can flood you with answers. They can’t decide which problems are worth solving, for whom, and under what constraints. Choosing where to point the machine, and how to define “good” in that context, remains the core differentiator.

2. Interrogation and critique - The job isn’t to rubber-stamp model outputs; it’s to stress-test them. That means probing assumptions, comparing alternative runs and tools, looking for missing data, and deliberately trying to break the answer before reality does.

3. Sensemaking and integration - Models produce fragments: passages of text, snippets of code, options, scenarios. Humans weave those into coherent systems – a design, a policy, a risk scenario, an operating plan – that fit organisational reality, constraints, and culture.

4. Accountable decision-making under uncertainty - Ownership of consequences still sits with humans. Someone has to weigh imperfect information, apply values and ethics, take the political risk of choosing, and then bring other humans with them. Identity forms where responsibility accumulates.

These aren’t the only enduring human advantages – relational trust, leadership, and deep domain craft still matter enormously – but most of them sit on top of, or feed into, these four. Together, they form the spine of curatorial expertise: not producing more artefacts, but deciding what matters, what holds, and what you’re willing to put your name on.

These four skills are the equivalent of knowing which wine goes with which meal: technically optional, socially decisive.

The Path Forward: Rebuild the Identity, Not Just the Skillset

Tools change instantly. Identities don't. The transition from maker to curator requires conscious reconstruction.

Make your invisible work visible. Decision logs, rationales, pattern notes help you see your own judgement. Start keeping a simple log of AI outputs you've rejected and why. It's clarifying.
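If you want something more structured than a notebook, here is a minimal sketch of such a log in Python. The field names, the three action labels and the `DecisionLog` class are illustrative assumptions rather than any standard; a spreadsheet with the same four columns would do the job just as well.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

# Hypothetical set of actions; adapt the vocabulary to your own workflow.
ACTIONS = {"accepted", "edited", "rejected"}


@dataclass
class Decision:
    """One judgement call on an AI-generated output (illustrative schema)."""
    day: date
    artefact: str  # what the model produced, e.g. "stress scenario draft"
    action: str    # one of ACTIONS
    reason: str    # the rationale, in your own words


@dataclass
class DecisionLog:
    entries: list = field(default_factory=list)

    def record(self, artefact: str, action: str, reason: str,
               day: Optional[date] = None) -> Decision:
        """Append a decision, defaulting to today's date."""
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action!r}")
        decision = Decision(day or date.today(), artefact, action, reason)
        self.entries.append(decision)
        return decision

    def rejected(self) -> list:
        """Return only the outputs that were rejected outright."""
        return [d for d in self.entries if d.action == "rejected"]

    def summary(self) -> Counter:
        """Count decisions per action, to make the pattern of judgement visible."""
        return Counter(d.action for d in self.entries)


if __name__ == "__main__":
    log = DecisionLog()
    log.record("stress scenario draft", "rejected",
               "assumed funding markets stay open throughout the stress")
    log.record("stress scenario draft v2", "edited",
               "tightened one edge case and rewrote one line")
    for d in log.rejected():
        print(f"{d.day}: rejected '{d.artefact}' because it {d.reason}")
    print(dict(log.summary()))
```

The `reason` field is the part that matters. The counts only show how often you intervened; the reasons show what you saw that the model did not.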

Study the masters of curation. Editors, staff engineers, model validators, showrunners, risk committees. Different fields, same cognitive patterns. I once had the good fortune of spending a couple of hours with David Heyman, the producer of the Harry Potter series of movies. It was amazing to hear how he approaches the curation at the heart of his work.

Build rituals that honour curation. Starting in 2026, Meta will be assessing employee performance partly on their "AI-driven impact". In the same spirit, you can hold weekly "AI decisions worth discussing" sessions: retrospectives focused not on output, but on how you are partnering with AI to deliver.

Rewrite job descriptions for reality. If leadership doesn't name the shift, people will feel the loss without understanding the gain.

Solve the identity gap and adoption accelerates. Ignore it and you get burnout wrapped in high productivity metrics.

The trick is to treat judgement as a design feature, not a defect. Having flown back from Singapore yesterday (as I update this draft), I'm reminded of how the best airlines and hotels make the things they can’t automate feel like luxuries.

The Bottom Line

When an AI system hands you something impressive – a model, a scenario, a block of code – you'll feel the old identity flinch.

The instinctive question is: if the machine can do this, what's left for me?

But the identity-preserving question, the one that builds the future, is: what am I bringing to this decision that the model cannot?

That answer is your new craft. It's also your new status. And your new identity.

This may feel some way off to many of you, but when it begins in your field, the shift will happen quickly.

Until next time, you'll find me at my desk, documenting the decisions I made today that no AI could have made for me...
