From Individual Productivity to Collective Stupidity

- Adrian Munday

- Nov 23, 2025

It's 8:17am on a Tuesday in late 2026, and I'm staring at five risk assessments that supposedly came from my team. Every one is immaculate. Clean structure. Clear logic. Even the right references to PRA SS1/21 and MAS TRM. The strange part is how completely different they are. Five analysts, five AI-assisted drafts, five valid interpretations of the same scenario - but no common spine.

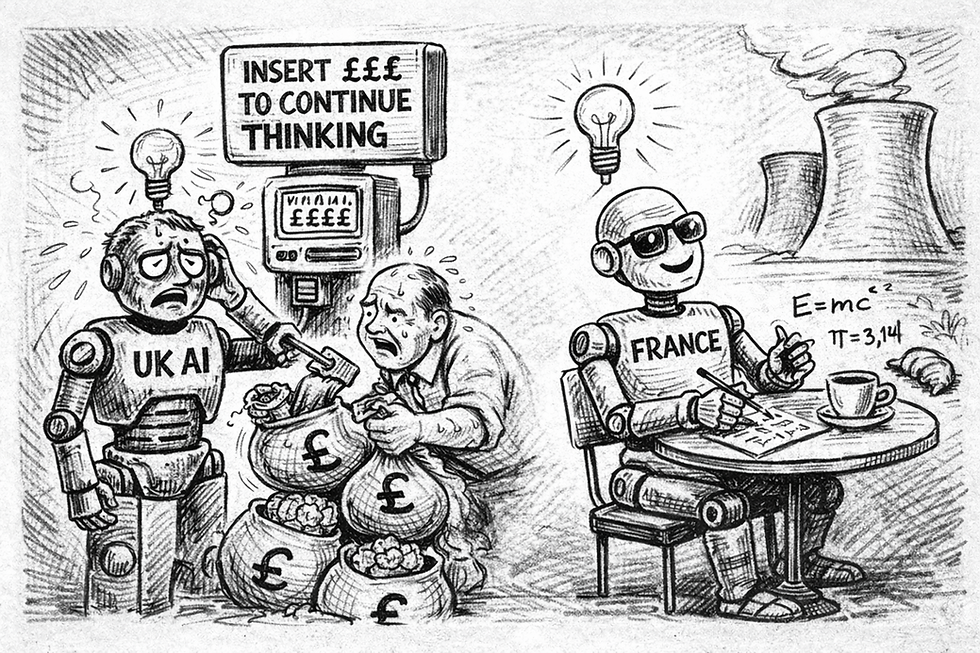

Before AI, this sort of divergence took weeks. Now it takes minutes. The work isn't bad. It's too good, in too many directions at once. And as I scroll through the pages, a simple question cuts through the noise: If everyone is individually faster, why is the team collectively slower?

That's the moment many organisations are about to stumble into. Not a collapse in quality. Not a shortage of ideas. The opposite. A surge of parallel outputs that multiplies the coordination load until the system strains under its own abundance. A 50-page report used to be an indicator of effort. Now it’s a warning flag.

Here's what we're going to explore: why uncoordinated AI use creates a specific organisational failure mode, why the mathematics of coordination break when individual capacity scales exponentially, and how leaders can build systems that prevent the slide from coherence to chaos.

So where does that leave us? AI increases creation faster than humans can increase understanding. That gap, between what's produced and what's collectively absorbed, is where teams become individually smarter but collectively dumber.

With that, let's dive in.

The Mathematics of a Coordination Catastrophe

The numbers are simple. When individual capacity increases without corresponding coordination mechanisms, the integration burden grows far faster than linearly. A team of five people, each now capable of 3x their previous output, doesn't create 15 units of neatly packaged progress. They create 15 units of material that now need to be reconciled into a coherent whole. This is backed up by recent research:

MIT Sloan (July 2024) and Harvard/BCG (Feb 2025 follow-up): studies showing that AI-assisted writing increases variance in reasoning structures, not just volume, with more divergent cross-team conclusions.

The problem is that AI amplifies individual capability, but coordination happens between humans, and those humans are about to be managing vastly more information flow. Think of it like upgrading every car on a road to go twice as fast without changing the traffic lights, road signs, or roundabout design (‘intersections’ in other parts of the world...). Individual capacity is increasing dramatically, but the coordination infrastructure remains unchanged.
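To put rough numbers on that, here is a minimal Python sketch. It assumes, purely for illustration, that the integration burden is proportional to the number of pairs of outputs from different authors that might need reconciling. The function name `reconciliation_pairs` and the pairwise model itself are simplifications introduced here, not something taken from the studies cited above.

```python
from math import comb


def reconciliation_pairs(people: int, outputs_per_person: int) -> int:
    """Count pairs of outputs written by *different* people.

    Assumption (illustrative only): each such pair is one potential
    reconciliation task. Pairs by the same author are excluded, on the
    basis that one person keeps their own drafts consistent.
    """
    total_outputs = people * outputs_per_person
    all_pairs = comb(total_outputs, 2)
    same_author_pairs = people * comb(outputs_per_person, 2)
    return all_pairs - same_author_pairs


before = reconciliation_pairs(people=5, outputs_per_person=1)
after = reconciliation_pairs(people=5, outputs_per_person=3)
print(before, after)  # prints: 10 90
```

Under this assumption, tripling each person's output takes the team from 10 cross-author pairs to 90: nine times the reconciliation work for three times the material. Strictly speaking that is quadratic growth, but it makes the same point. The coordination load rises much faster than the output that causes it.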

Before AI, productivity was limited by typing speed, cognitive stamina, and the slow grind of human analysis. Teams naturally moved at the speed of their slowest integrators. It wasn't elegant, but it kept coherence intact. Coordination happened by necessity.

AI changes that dynamic entirely. Once you remove the creation bottleneck, output becomes exponential. A single analyst can generate ten scenario variants, five remediation paths, and a fully drafted committee paper before they've even had their first coffee.

The Hidden Cost: Shared Context Crisis

What we're witnessing is a ‘coordination debt crisis’. Coordination debt is the accumulated cost of integrating individually correct but contextually incompatible AI-generated outputs. Every piece of AI-generated output carries implicit context: the prompt used, the examples referenced, the assumptions embedded. When you create content independently, you maintain that context in your head (and in your prompt history). But when five people do this simultaneously, the implicit context diverges silently and catastrophically.

The problem is variation. AI doesn't produce one canonical answer; it produces many possible answers, each defensible, each coherent, each plausible. That flexibility is brilliant for individuals and brutal for teams.

Consider what happens in practice. Sarah uses ChatGPT to draft a market analysis, feeding it last quarter's data and asking for growth projections. James uses Claude to write a competitive landscape, providing different contextual framing about market positioning. Maria generates a strategic recommendation using Gemini, with yet another set of assumptions about customer priorities. Each output is internally coherent. Combined, they're contradictory.

In my own world of operational risk, the real value of much of what we do (apologies for the overused cliché) is the journey, not the destination. It is the scenario workshop, not the scenario itself. The people in the room get to wander into the darkest corners of how a process, project or strategy can go wrong. Everyone walks away with a visceral, earned understanding of the challenge ahead.

It's easy to see how this morphs, if we’re not very mindful, into a world of AI-assisted risk models that are technically correct but structurally incompatible and don't translate into action. The models don’t disagree. They simply don't align. One uses a Bayesian network. A second leans into scenario-driven narratives. Individually strong, but none socially constructed or collectively understood. In fact, collectively incoherent.

It's like five musicians playing different songs in the same key. Harmonious in theory. Chaos in practice.

The traditional solution of more meetings doesn't scale. You can't coordinate implicit context verbally when the volume of output has increased 3-5x. The conversation would never end. Some teams will try documentation: "Everyone must record their prompts and assumptions." This works until the documentation itself becomes overwhelming. Now you're not just reconciling outputs; you're reconciling documentation about the process of creating outputs.

Winners Build New Infrastructure

The risk here is that organisations end up optimising for the wrong thing. They celebrate individual productivity gains whilst ignoring the mounting coordination debt. It's like praising everyone for filling buckets faster whilst the ship sinks from all the displaced water.

I firmly believe the organisations that will dominate the next five years aren't the ones with the best AI tools. They're the ones solving for shared context at scale. This requires fundamentally different infrastructure: not better meeting schedules or more detailed documentation, but systems that maintain coherence as individual capacity scales.

What does that look like? Some possibilities are emerging. One is shared AI workspaces where every generation is visible to everyone, with prompt history and decision trees accessible. Another is "context APIs", where key assumptions and frameworks are codified and can be referenced programmatically.
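As a thought experiment, a "context API" could be as small as the Python sketch below. Everything in it is hypothetical: the `SharedContext` class, the `build_prompt` helper, the version label and the example assumptions are placeholders invented for illustration, not a description of any existing product or of how a particular team works.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Mapping


@dataclass(frozen=True)
class SharedContext:
    """A read-only, versioned set of assumptions the whole team draws on."""

    version: str
    assumptions: Mapping[str, str]

    def get(self, key: str) -> str:
        """Return a codified assumption, failing loudly if it is not agreed."""
        try:
            return self.assumptions[key]
        except KeyError:
            raise KeyError(
                f"'{key}' is not a codified assumption in context {self.version}"
            ) from None

    def preamble(self) -> str:
        """Render the context as text to place at the top of any prompt."""
        lines = [f"Shared context version: {self.version}"]
        lines += [f"- {k}: {v}" for k, v in sorted(self.assumptions.items())]
        return "\n".join(lines)


# Hypothetical values: placeholders, not real policy or data.
TEAM_CONTEXT = SharedContext(
    version="example-v1",
    assumptions=MappingProxyType({
        "risk_taxonomy": "team taxonomy (placeholder)",
        "time_horizon": "12 months",
    }),
)


def build_prompt(task: str, context: SharedContext = TEAM_CONTEXT) -> str:
    """Prefix every task with the same shared assumptions."""
    return f"{context.preamble()}\n\nTask: {task}"


print(build_prompt("Draft a risk assessment for the scenario."))
```

The design choice worth noticing is that the assumptions live in one place and are read-only. Two analysts calling `build_prompt` with different tasks still start from an identical preamble, and asking for an assumption that has not been agreed raises an error rather than letting someone quietly invent one.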

The pattern that emerges from the organisations that will get this right: they will constrain the creative surface area. Most people assume AI requires more freedom. In reality, it requires tighter boundaries. Shared templates, canonical structures, and standardised prompts act like guard rails. They reduce variation and increase comparability.

They shift from document-centric to model-centric approaches. Documents fragment. Models unite. When teams operate from shared taxonomies, shared scenario libraries, or shared risk structures, AI becomes an amplifier of the same underlying model rather than a generator of novel ones.

And coordination gets built into the workflow, not the meeting schedule. The teams that succeed embed alignment into the creation process, not the end of it. They use verification agents, cross-comparison tools, and AI assistants that highlight divergence early.
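A cross-comparison tool of this kind does not have to be sophisticated to be useful. The sketch below, in Python, assumes each draft arrives with a small dictionary of declared assumptions, and simply reports every assumption on which the authors differ. The function name `find_divergence` and the example values are hypothetical and invented for illustration.

```python
from collections import defaultdict


def find_divergence(
    drafts: dict[str, dict[str, str]],
) -> dict[str, dict[str, list[str]]]:
    """Return assumptions on which drafts disagree.

    `drafts` maps author -> {assumption name -> declared value}.
    The result maps each contested assumption to {value -> authors}.
    """
    by_key: dict[str, dict[str, list[str]]] = defaultdict(lambda: defaultdict(list))
    for author, assumptions in drafts.items():
        for key, value in assumptions.items():
            by_key[key][value].append(author)
    # Keep only assumptions where more than one distinct value was declared.
    return {
        key: {value: sorted(authors) for value, authors in values.items()}
        for key, values in by_key.items()
        if len(values) > 1
    }


# Illustrative declarations only; the values are invented.
drafts = {
    "Sarah": {"baseline_period": "last quarter", "customer_priority": "price"},
    "James": {"baseline_period": "last quarter", "customer_priority": "service"},
    "Maria": {"baseline_period": "trailing 12 months", "customer_priority": "price"},
}

for key, values in find_divergence(drafts).items():
    print(f"{key}: {values}")
```

Run at the moment drafts are created rather than at the review meeting, a check like this surfaces the mismatch while it is still cheap to fix. It only catches assumptions people have declared, so it complements shared templates rather than replacing them.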

The metaphor here is simple: instead of everyone writing their own map and comparing them afterwards, you create a shared map and let everyone extend it in controlled ways.

The Bottom Line

The real challenge is architectural. We're not yet in a position to prescribe specific solutions because most organisations are still discovering the problem. What's becoming clear is that the coordination infrastructure needs fundamental redesign, not incremental improvement.

Think about what needs to change at the process level. When individual output scales 3-5x, the review process itself becomes the bottleneck. Some organisations will need to move from sequential review cycles to something closer to continuous integration, borrowing concepts from software development where code is constantly merged and tested rather than developed in isolation and reconciled later.

At the organisational level, roles are going to shift. The person who's brilliant at individual analysis but creates outputs that can't be integrated becomes a liability, not an asset. We'll need people whose primary skill is maintaining coherence across high-volume AI-generated material. That's not a traditional project manager role or a traditional analyst role. It's something new. Ultimately, the trend over the last few years of building horizontal shared services might need to be reversed into verticalisation. An integrated product team or capability (possibly down to an organisational unit of one person with many agents) is the ultimate conclusion of removing coordination friction.

And at the technology level, we're probably going to need coordination tooling that doesn't exist yet. Not collaboration tools; we have plenty of those. Coordination tools. Systems that make divergence visible early, that enforce shared context, that prevent teams from accidentally working in incompatible directions. The winners will be the organisations that build or buy these capabilities before their competitors realise they need them. A GitHub for non-programmers?

The hardest part is going to be accepting that individual productivity without collective coordination is worse than useless. It's actively destructive. That brilliant analysis you generated in fifteen minutes? If it can't be integrated with what your colleagues are producing, you've just created expensive noise.

The organisations that thrive won’t be the ones who create the most. They’ll be the ones who integrate the fastest.

Until next time, you’ll find me thinking about how to build a shared map of the world and the required tools to navigate…
