Behavioural Surplus 2.0: Who Controls Your Proof of Human?

- Adrian Munday

- Nov 9, 2025

This blog is Part 1 of the Big Questions series.

It's 7:15am on a Sunday in 2027 and I'm just trying to log into a risk platform.

Instead of the usual password box, a new message appears:

"To keep your account safe, we'll analyse your typing patterns and mouse movements to confirm you're human. This will only take a moment."

This isn't a CAPTCHA with blurred traffic lights.

This is how long I hold each key, the gaps between my keystrokes and the tiny tremors in my mouse movements. Every micro-gesture, measured. All to prove I'm not a bot.

Welcome to the authenticity wars.

But let's back up. Why are we here?

Because the ground beneath online trust has shifted. AI can now generate photorealistic faces, convincing voices, and entire video scenes that never happened. Bots can pass most traditional CAPTCHAs. Deepfakes spread faster than fact-checks. And automated accounts (some benign, many not) now outnumber humans on parts of the web.

The consequences aren't abstract. Elections swing on viral misinformation. Financial fraud scales through automated impersonation (a version of which was the original inspiration for this blog back in June). Customer service drowns in bot traffic. And increasingly, we simply can't tell if the person (or "person") we're talking to online is real.

The old markers of authenticity have failed. Verification badges can be bought. Profile photos can be AI-generated. Even video calls can be faked in real time. We need new ways to prove someone is human.

So we're building infrastructure to answer two questions that once seemed absurd:

Is this content real?

Is this interaction human?

On paper, that sounds sensible. A necessary defence against an AI-saturated web. In practice, it will quietly reshape how we feel about everything we see and share online, and who gets to participate.

With that additional context, let’s dive in.

The New Authenticity Stack

Behind the scenes, a three-layer 'authenticity stack' is emerging:

1. Biometric identity – projects like World (formerly Worldcoin) scan irises to create cryptographic 'proofs of personhood.' Millions have joined, and regulators are circling.

2. Behavioural biometrics – typing rhythm and mouse paths form a behavioural fingerprint, used for silent background checks.

3. Content credentials – the Coalition for Content Provenance and Authenticity (C2PA) standard lets creators attach signed 'nutritional labels' to media showing when, how, and by which tool it was made.
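To make the second layer concrete, here is a minimal sketch of how typing rhythm could be turned into numbers. It is an illustration only, not how any particular vendor does it: the `KeyEvent` structure, the function names, and the sample timings are all hypothetical. It measures the two things described in the opening scene: how long each key is held (dwell time) and the gap between releasing one key and pressing the next (flight time).

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class KeyEvent:
    """One keystroke. Hypothetical structure, for illustration only."""
    key: str
    down_ms: float  # timestamp when the key was pressed
    up_ms: float    # timestamp when the key was released


def dwell_times(events):
    """How long each key was held down."""
    return [e.up_ms - e.down_ms for e in events]


def flight_times(events):
    """Gap between releasing one key and pressing the next."""
    return [b.down_ms - a.up_ms for a, b in zip(events, events[1:])]


def rhythm_profile(events):
    """Collapse a typing sample into two summary numbers."""
    flight = flight_times(events)
    return {
        "mean_dwell_ms": mean(dwell_times(events)),
        "mean_flight_ms": mean(flight) if flight else 0.0,
    }


# Made-up timings for someone typing "risk".
sample = [
    KeyEvent("r", 0.0, 90.0),
    KeyEvent("i", 150.0, 230.0),
    KeyEvent("s", 300.0, 400.0),
    KeyEvent("k", 480.0, 550.0),
]
profile = rhythm_profile(sample)
print(profile)
```

A real system would presumably use far richer features than two averages, but even this toy version shows why the data is sensitive: the profile describes the person, not the password they typed.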

Together these promise a safer web: fewer bots, clearer labels, more traceable news.

Governments and agencies such as the NSA are already backing them through standards development and guidance. So far, so reassuring.

But authenticity infrastructure isn't neutral. It shapes our behaviour. It changes what we notice, what we skip, and who we trust.

Training the System That Watches You

Here's the first paradox.

Every time you 'prove you're human,' you also train the model judging you (whether that's CAPTCHA or behavioural models). They learn not just to spot you, but to define what counts as 'normal' behaviour across millions of people.

Content credentials follow the same logic: they flag AI manipulation while producing vast datasets on how humans create and share.

The bottom line is that we're not just using authenticity systems; we're training them. That's the 'Behavioural Surplus 2.0' moment: we've moved from harvesting clicks and likes to harvesting the patterns of our humanness itself. A captured behavioural profile is not like a password. It becomes another vector of compromise, and once it's compromised you can't simply swap it for a new one. There are well-established controls around biometric identity techniques, but the behavioural and content regimes are less mature. I'm sure this is an area I will dig into further in the future.

When Everything Has a Label (Except What Matters)

Consider the top layer: content authenticity.

In theory, the future looks straightforward: news photos carry a small icon showing capture time, camera, and edits. AI videos come with a clear 'synthetic' label. Social posts include provenance cards like 'Taken by X, edited in Y, published by Z.'

And some of this already exists.

Tech and media companies are lining up behind C2PA and the Content Authenticity Initiative (CAI), embedding and verifying these credentials across the web.

But in reality, most platforms strip or hide that data.

To test this, the Washington Post created an AI video with proper C2PA labels. They uploaded it to eight major platforms: Facebook, TikTok, Instagram, X, LinkedIn, Pinterest, Snapchat, and YouTube.

Only YouTube displayed a warning. Even that notice was hidden in the description.

So we're heading for a world where we could know more about what we see, but only if:

• the creator added credentials,

• the platform kept them,

• the interface showed them, and

• we remembered to look.

That's a lot of ifs before breakfast...
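The chain of ifs above can be written down as a tiny sketch. The function name and the probabilities are hypothetical, chosen purely to show the shape of the problem; they are not measurements of any platform. The point is that a label only helps when every link holds, and the odds multiply.

```python
from math import prod


def label_is_seen(creator_signed, platform_kept, interface_showed, reader_looked):
    """A provenance label only helps if every link in the chain holds."""
    return all([creator_signed, platform_kept, interface_showed, reader_looked])


# Illustrative, made-up odds for each link. NOT real measurements.
steps = {
    "creator added credentials": 0.5,
    "platform kept them": 0.5,
    "interface showed them": 0.5,
    "we remembered to look": 0.5,
}

# If the links were independent, the chance that all four hold is the product.
chance = prod(steps.values())
print(f"Chance the label is actually seen: {chance:.4f}")
```

Even with a coin-flip at every step, the label reaches the reader only about one time in sixteen, and that assumes the steps are independent, which they may not be.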

The Psychology of 'Authentic Enough'

Authenticity tools aim to rebuild trust, but they may only change the 'texture of doubt'.

1. From 'Is this true?' to 'Is this labelled?': As AI images become photoreal, badges replace judgement. We check labels instead of thinking, then stop checking at all. Trust shifts from people to icons few end up understanding.

2. Authenticity theatre: Under pressure, firms will deploy the equivalent of the scourge that is cookie banners: accurate but meaningless disclaimers.

'AI may have been used.'

Boxes ticked. Understanding lost.

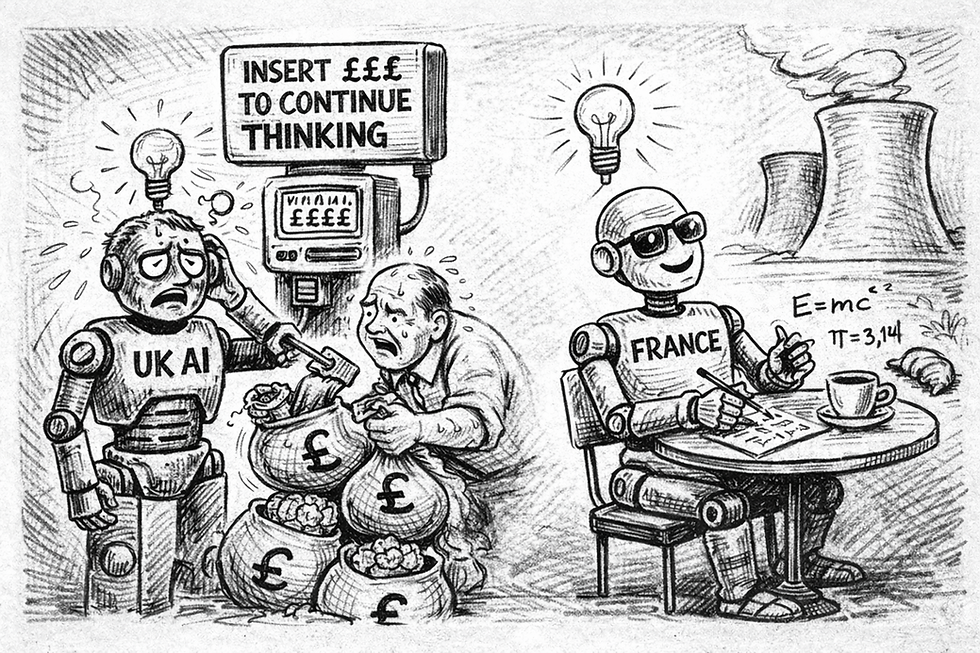

3. Unequal authenticity: Big newsrooms can afford verification pipelines; independent creators and activists are far less likely to be able to. The result: a two-tier internet. 'Signed and trusted' versus 'unsigned and suspect'.

The internet once democratised information. AI may quietly bring the gatekeepers back.

How This Changes the Way We Engage Online

So how does this shift affect how we move through the web? I think it shifts us along three lines:

1. From passive feed to provenance-aware scrolling

A healthy information diet means noticing what we see and what's missing. We should check and click provenance icons on emotional content, and treat unlabelled posts as unknown, not fake. Finally, we should ask, 'Who benefits if I share this?'

It's a light 'provenance reflex,' like a phishing check: glancing at a sender before opening an odd email.

2. From 'authentic content' to 'authentic engagement'

Labels can't stop emotional hijacking. They don't fix outrage loops, polarisation, or midnight doomscrolling. A verified video can still fuel bias: 'It's certified, so my anger must be justified.'

The harder question: Is my reaction authentic, or engineered?

3. From 'I am the product' to 'my humanness is the product'

Behavioural data extends the old surveillance trade. Your clicks drove the ads you saw. Now we’re moving towards a world where your typing rhythm, scroll speed, and device posture feed your risk scores and engagement predictions.

Useful for security, irresistible for marketing.

Without restraint, our behaviour becomes another asset class.

Living With It

Opting out of authenticity tools isn't realistic. In a world of cheap fakes and large-scale fraud, we need stronger systems. But we can still choose how we live with them.

As individuals

A few small habits go a long way:

• Notice the label – and its absence. If a platform never shows provenance, that's a signal in itself.

• Slow down for weaponised emotions. Anything that makes you furious, euphoric, or afraid deserves one extra check.

• Separate 'real' from 'right.' Authentic footage can still mislead or be stripped of context, as the BBC recently found out with its selectively edited Trump footage. Provenance is a starting point, not a stamp of moral truth.

As organisations

If you build products or publish content (or run risk teams!):

• Adopt provenance standards, but surface them clearly, not as buried tick-boxes.

• Don't treat 'AI-generated' labels as a shield from criticism. The experience still matters.

• Handle biometric and behavioural data like toxic waste: collect the minimum, protect it tightly, and never reuse it for growth tests.

The boundaries you set now will shape your culture later.

The Real Question

We're at an inflection point. The authenticity infrastructure we're creating will decide whether 'proof of human' becomes a way to defend our focus and autonomy, or another channel to monetise them.

It will influence who we see as trustworthy, and whether we meet the web with cynicism, blind faith in badges, or something wiser in between.

So the question for this first Big Question blog isn't just:

'Can we tell what's human and what's synthetic?'

It's:

'When authenticity itself becomes a system, who does it teach us to trust – and who does it quietly teach us to ignore?'

Until next time, I'll be the one hovering over a tiny 'info' icon, asking whether that badge in the corner helps me see more clearly, or just makes me feel that way.
